I felt compelled to share and amplify this LinkedIn post by Esmè V., a self-described philosopher & educator (stewardship vs. extraction in education & organizations), writer, speaker, and host of the weekly podcast The Bell Rebellion, who lives in South Africa. Needless to say, I don't create AI caricatures with my photos, though I have been known to generate AI images with other photos, e.g., Capstone Bear. Chatbots aren't your friends. They're tools that remember everything you tell them, digital vacuums that suck up and process all of your personal data.

Here's something I posted in response to a Facebook friend who said I am a fan of AI: "Rather than labeling me a 'fan of AI,' let's just say that I appreciate its creative and productive uses for personal and professional purposes but am also acutely aware of its drawbacks. The genie isn't going back into the bottle, so it's best to find solutions to these problems rather than rejecting the technology outright." Full disclosure: I deleted my company's Twitter (X) account because of its owner and what he represents. I use Facebook on a limited basis, mainly to interact with company posts, and check Instagram occasionally for the same reason. Most social media channels are a digital cesspool.

There is one new app that I highly recommend: UpScrolled, developed by Issam Hijazi, a Palestinian-Australian developer with experience at IBM and Oracle. Here's a brief description: "Time to scroll differently. Shadowbanned elsewhere? Not here. UpScrolled is the social platform where every voice gets equal power. No shadowbans. No algorithmic games. No pay-to-play favoritism. Just authentic connection where your content reaches the people who matter most."

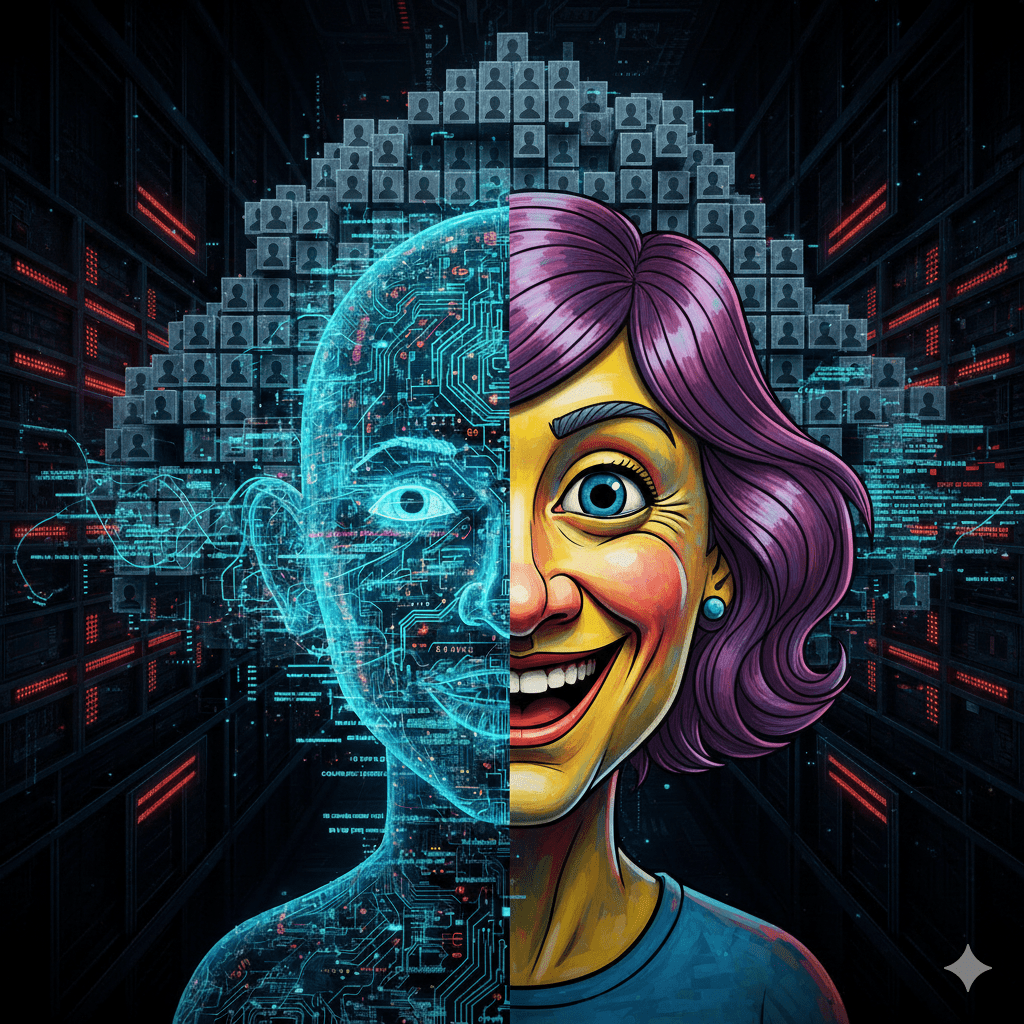

That “AI caricature of me” trend going around …

Let’s talk about what’s actually happening.

When you upload your photo [or describe yourself] and ask Copilot, Gemini, or ChatGPT to “create a caricature based on what you know about me,” you’re providing:

• Your facial biometric data

• Your behavioral/personality data (from chat history)

• The relationship between how you see yourself and how AI characterizes you

This is dual-layer data mining wrapped as entertainment.

I'm seeing this trend particularly in education circles: teachers sharing the prompts, delighting in the results, and modeling the behavior across professional networks.

The data you’re volunteering becomes:

♤ Training sets for increasingly sophisticated profiling

♤ Permanently harvestable material (remember those nudifying apps?)

♤ Infrastructure for behavioral prediction you're not consenting to

The pattern being normalized:

☆ AI “knowing you well” feels like relationship, not surveillance

☆ Your identity is entertainment currency

☆ Data harvesting disguised as play

Some schools have already removed all student photos from public spaces because of exploitation risks.

Yet we’re voluntarily feeding the same systems with our own faces and identities.

This isn’t about being paranoid. It’s about being accurate.

These platforms don’t provide these features because they’re fun for you.

They provide them because they’re profitable from you.

Before you share that next caricature prompt, ask:

>>What am I actually giving away?

>>Where does this data go?

>>Who profits from my participation?

>>What future am I normalizing for the next generation?

The stewardship question: What if we treated our biometric and behavioral data with the same care we (should) treat our financial data?

Not every trend is harmless fun. Some are extraction disguised as entertainment.