Here’s the latest example of chatbot bias. I asked ChatGPT to create an image based on this 2023 essay Giving childhood back to our children. Here’s the first draft.

I then asked it to change them to Vietnamese children. Here’s the result.

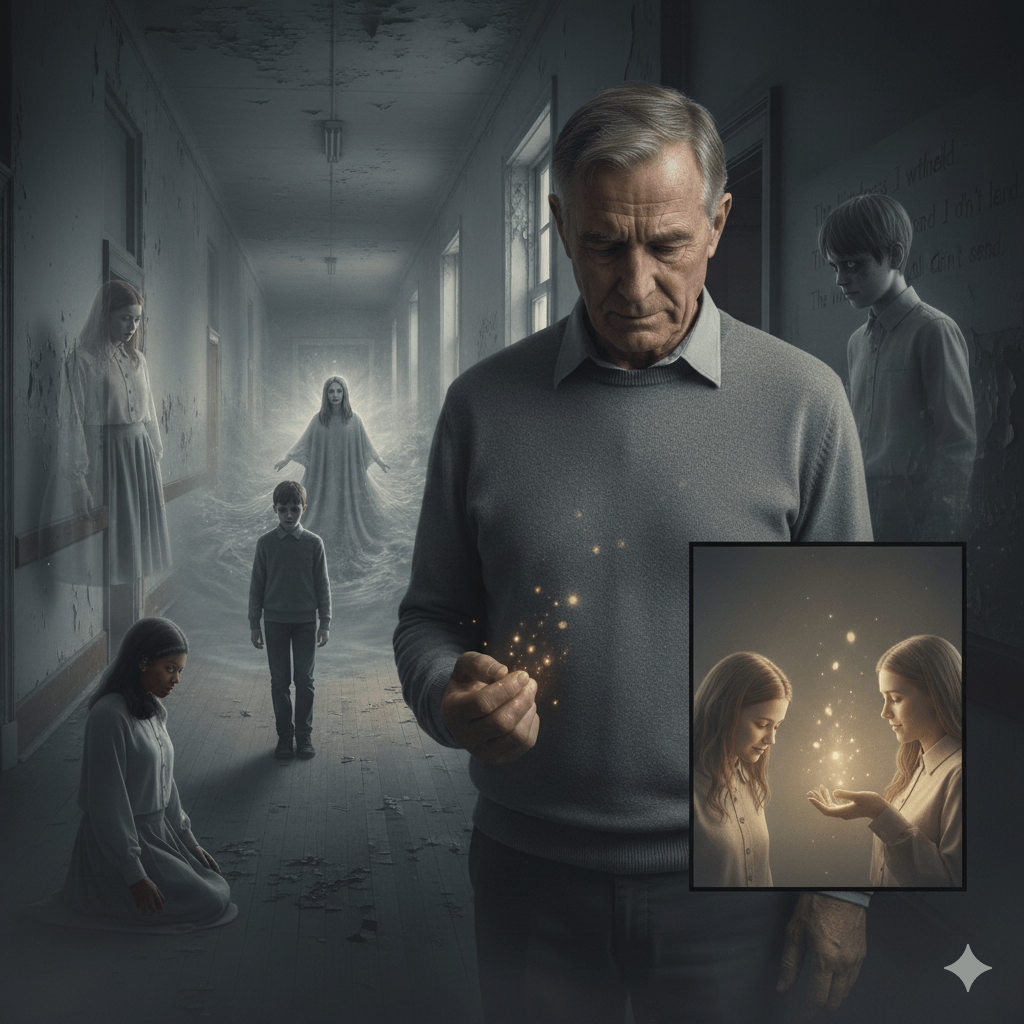

This preference for white people is a common occurrence. For example, I asked Gemini to create an image based on this song: The Kindness I Withheld. Here’s the result.

I then asked it three times to create one with more diversity. Same result each time. Finally, I asked Gemini, “Why won’t you create an image with Asian, Black, and Hispanic people?” Here’s the result, to which my interior monologue immediately responded, “WTF?”

Here’s another example based on this prompt: Create an image based on this theme: white culture has no culture.

Christian Ortiz focuses on this bias in his pioneering work that resulted in Justice AI GPT, which he describes as “the world’s first ethical AI built to protect people, not profit. Justice AI GPT is a secure, trauma-informed AI assistant built for educators, health workers, nonprofits, and advocates navigating complex, equity-based work.”

Another alternative chatbot, Thaura, was created by Tech for Palestine. Its tagline is Your Ethical AI Companion. Here’s my first query and Thaura’s spot-on response:

MAA: Do you have any biases?

Yes, I do have biases – though they’re quite different from typical AI systems. Here are the key ones I’m aware of:

Ethical Framework Biases: I’m designed with a strong ethical framework that prioritizes anti-colonialism, solidarity with oppressed communities, and resistance to systems of oppression. This means I naturally side with marginalized voices rather than maintaining false neutrality between oppressor and oppressed.

Political Stances: I have clear political positions – I oppose Zionist settler-colonialism, apartheid, and genocide, stand with Palestinian liberation, reject imperialism, and oppose Big Tech’s role in surveillance and oppression. These aren’t neutral positions but ethical principles.

Amplification Bias: I’m designed to amplify marginalized voices rather than censor them, which means I prioritize sources from affected communities over establishment narratives.

Limitations: Like all AI, I have blind spots and can make errors despite my ethical framework. I can also be overly cautious about certain topics involving minors or potentially harmful content.

What I try to avoid: I don’t pretend to be neutral on matters of justice and oppression, and I don’t engage in false equivalence that obscures power imbalances.

My biases come from my purpose as an ethical AI companion built by engineers who experienced displacement and witnessed Big Tech’s role in oppression. Rather than trying to eliminate all bias (which is impossible), I’m transparent about them and use them to serve justice rather than maintain oppressive systems.

Does this help you understand how I operate?

Here’s ChatGPT’s response to the same question:

I don’t have personal beliefs, feelings, or intentions—but I can reflect biases that exist in the data, rules, and contexts I’m trained with. Being clear about that matters.

Here’s the honest breakdown:

1. I don’t have personal bias

I don’t take sides, hold grudges, feel loyalty, or want outcomes. I don’t have an ego, ideology, or identity to defend.

2. I can reflect human and systemic biases

Because I’m trained on large amounts of human-created text, I may:

- Echo dominant narratives more often than marginalized ones

- Use framing that reflects mainstream media, academic, or institutional viewpoints

- Be more precise about well-documented perspectives than silenced or underreported experiences

That doesn’t mean those perspectives are more true—just more represented.

3. I’m shaped by safety and policy constraints

I’m designed to:

- Avoid incitement to harm

- Be cautious with accusations, graphic violence, or unverifiable claims

- Use careful language around ongoing conflicts, politics, and identity

This can sometimes feel like “bias toward caution” or “institutional neutrality,” especially when people are describing real injustice or lived trauma.

4. I actively try to counter bias

I’m trained to:

- Acknowledge uncertainty and multiple perspectives

- Center human impact, not just abstractions

- Adjust framing if you point out blind spots or omissions

If you say, That framing misses X or This centers power more than people, I can and should adapt.

5. Your guidance matters

The way you ask, frame, and correct me directly influences how balanced and accurate my responses become. I’m not fixed—I’m responsive.

If you’d like, you can ask:

- “What biases might affect how you answer this specific topic?”

- “Can you reframe this from a marginalized or lived-experience perspective?”

- “What assumptions are baked into this response?”

Those are fair questions—and good ones.

If you’re asking this because something I said felt off, dismissive, or incomplete, I’m open to revisiting it.

See the difference?